A few weeks ago, I was working with a client who needed quite a few transformations on their data to make it usable for visualization. Up until this point, we were primarily using Tableau on top of the flat files. Tableau can make a few basic transformations; however, it struggles mightily with repeatability and with any transformations beyond the basics. Tableau Prep does a nice job addressing these shortfalls, but that product is still in its infancy and only serves about 50-60% of my data transformation needs. My other data transformation tool, Alteryx, covered all the bases in terms of transformation and automation, but the cost was slightly more than my client was willing to spend. I could have used Python (with the pandas package), but it doesn’t provide enough transferability, given that I need to hand the work over to the client’s analysts in the future.

Enter EasyMorph.

EasyMorph is a lightweight but feature-heavy tool that enables nearly all of the transformations that I need to make data consumable and insightful - particularly for data visualization. I have only been using EasyMorph for 2 weeks, but I feel strongly about the tool. Let me explain:

What I Like

General Flow

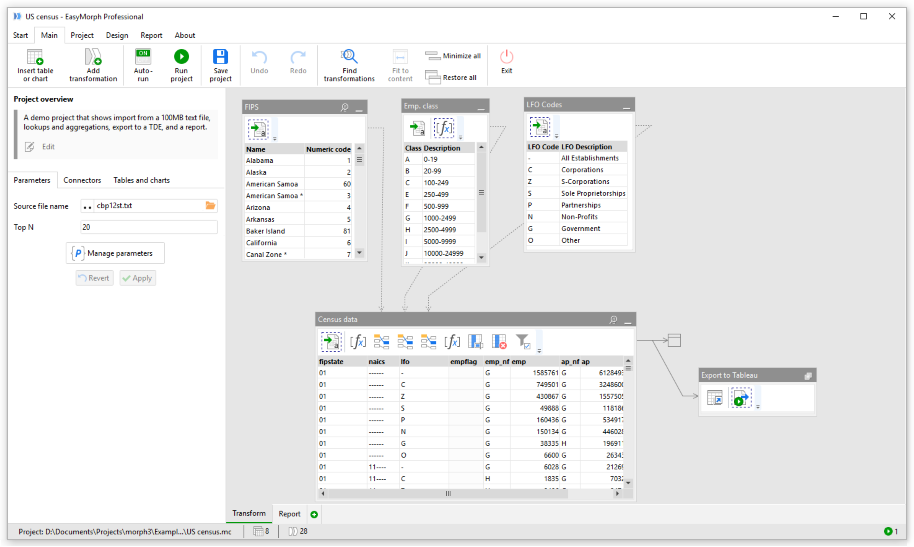

To enable solutions for my clients, I need to be able to efficiently create data workflows or “pipelines” that consume data, transform & augment it, and finally put it somewhere I can easily work with it. Alteryx has popularized the concept of “workflows”, which enables most users (or really power users) to make data work. EasyMorph latches on to the concept of workflows, allowing users to start with a file / connection and work their way to a final dataset using “transformations” (Alteryx calls them “macros” or “tools”). It’s incredibly smooth, and the interface, which is a “table” view concept, provides instant feedback on your changes. Here is a quick look at a sample workflow:

Transformations

There are not a staggering number of transformations available (unlike Alteryx), but their coverage of the needs is strong. They have nearly every basic transformation that an analyst could require. They also have a lot of transformations that other tools do not, such as their PowerShell transformation or the “iterate” transformation (Alteryx has this, but its implementation can be complicated).

Between the data “in” and “out,” the user can transform the data (e.g., join data, make wide data tall), augment it (e.g., create new fields based on formulas), and clean it (e.g., remove extraneous columns, rename certain values in a column). Further, there are quite a few data connections and export functions (e.g., export to Tableau Server).

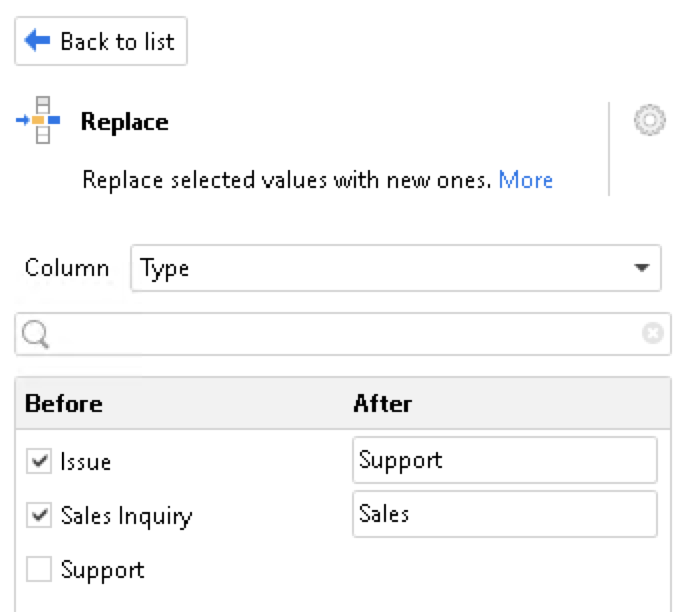

During my usage, I expected to find a limitation (some key transformation that wasn’t implemented), but I have yet to encounter one. I did, however, find a lot of helpful transformations, such as “Keep Mismatching” or “Replace”. These tools deliver critical functions that other tools make you jump through hoops to achieve.
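To give a sense of the hoops, here is a minimal pandas sketch of what I understand “Keep Mismatching” to do: keep only the rows of one table whose key has no match in another table. That reading of the transformation is my assumption, and the table names, column names, and the `keep_mismatching` helper are all made up for illustration.

```python
import pandas as pd


def keep_mismatching(left: pd.DataFrame, right: pd.DataFrame, key: str) -> pd.DataFrame:
    """Keep the rows of `left` whose `key` value does not appear in `right`.

    Hypothetical helper: an approximation of an anti-join, not EasyMorph's
    actual implementation.
    """
    has_match = left[key].isin(right[key])
    return left.loc[~has_match].reset_index(drop=True)


# Made-up sample data for illustration only.
calls = pd.DataFrame(
    {
        "customer_id": [1, 2, 3, 4],
        "call_type": ["Support", "Issue", "Sales Inquiry", "Support"],
    }
)
known_customers = pd.DataFrame({"customer_id": [1, 3]})

unmatched = keep_mismatching(calls, known_customers, "customer_id")
print(unmatched)
```

It is not a lot of code, but an analyst has to know about boolean masks and `isin` to get there, which is exactly the kind of thing a single named transformation hides.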

As a simple example, I have a field called “Call Type” that contains three (3) values: “Support”, “Issue”, and “Sales Inquiry”. I only need “Sales” and “Support”. With the “Replace” transformation, I can quickly and simply replace the two values that don’t fit without having to write an IF statement. Here we go:

I don’t have the time or wherewithal to go through all the transformations that I like (or why), but suffice it to say that I was pleased.
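For readers who think in code, the “Call Type” example above could be sketched in pandas roughly as follows. The mapping itself is my assumption for illustration (“Issue” folded into “Support”, “Sales Inquiry” shortened to “Sales”), and the sample rows are made up.

```python
import pandas as pd

# Made-up sample rows for illustration only.
calls = pd.DataFrame(
    {"Call Type": ["Support", "Issue", "Sales Inquiry", "Support"]}
)

# Hypothetical mapping: which old value becomes which new value is an
# assumption made for this sketch.
call_type_map = {"Issue": "Support", "Sales Inquiry": "Sales"}

# Values not listed in the mapping (here, "Support") are left untouched.
calls["Call Type"] = calls["Call Type"].replace(call_type_map)
print(calls)
```

A lookup table like this avoids a nested IF statement, which is the same idea the “Replace” transformation exposes without any code.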

Feature Set Robustness

There are a few features that EasyMorph has that others don’t do well or charge a high price for.

Documentation / Annotation

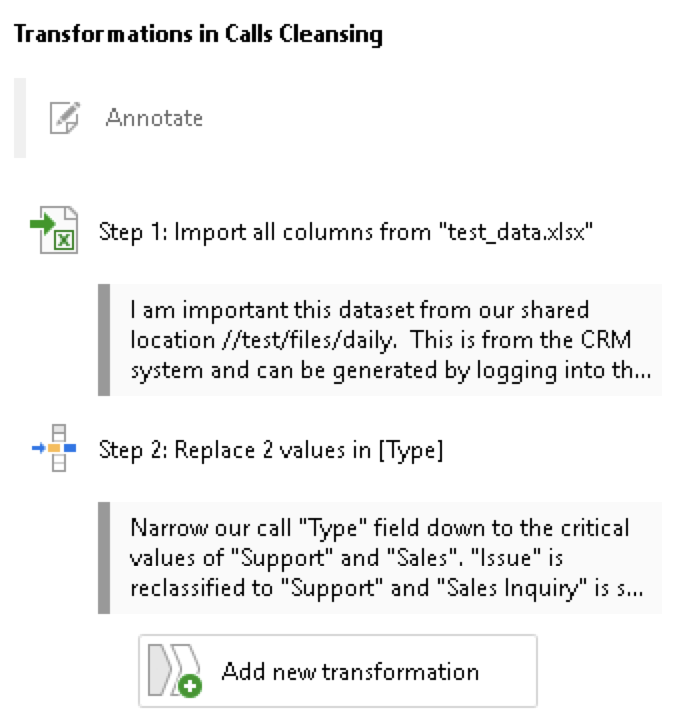

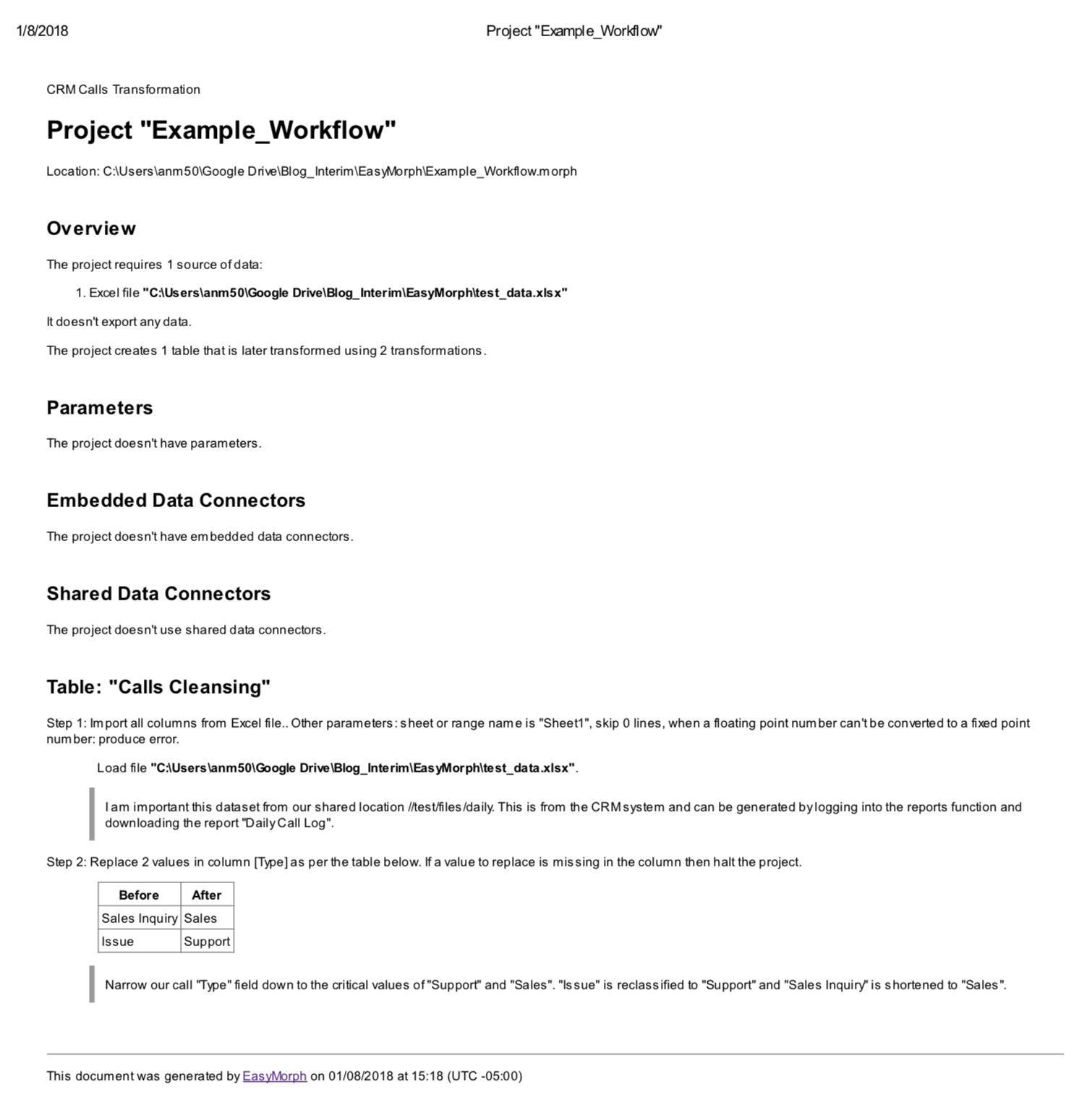

One of the most important concepts for me, and why I like these “workflow” data transformation tools, is documentation. I don’t want to maintain every workflow I build for my clients. It will get expensive for them and monotonous for me. Also, I want to build them efficient and effective solutions. This requires a level of documentation and annotation that allows any user to pick up an existing workflow and quickly understand what’s going on (and why). Alteryx implements this in the tool, allowing you to annotate every tool. It’s great for the designer, but the annotations don’t easily transfer to portable documentation, nor does it create documentation in a standard format / template. EasyMorph has the annotate function built into every transformation, allowing you to describe your transformation in a free text field. When ready, you can easily run “Generate Documentation” for your project, which will generate an HTML document in a standard format.

Here is an example of the inline documentation:

Here is an example of the export:

Automation / Scheduling

One of the reasons we use these data transformation tools is because we want the process to be less manual and more repeatable. Gone are the days of manipulating data in Excel, then trying to figure out what you did the next month to reproduce the same report!

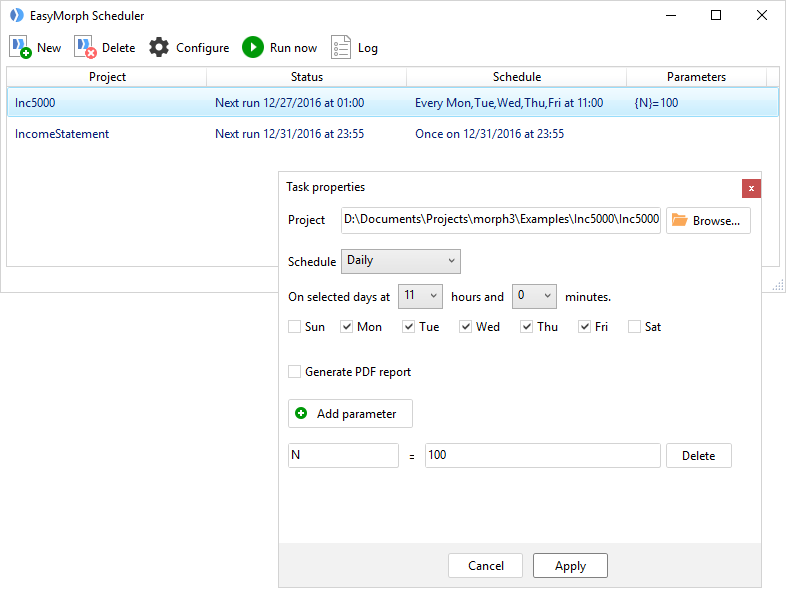

Other tools recognize that scheduling your workflows is critical, and they take advantage of that need by charging exorbitant amounts for this SIMPLE feature (which isn’t your first priority when purchasing). EasyMorph includes this feature in their professional pricing (see here)!

Regardless of pricing, the feature is incredibly easy to implement and use. You don’t need to be an expert to use the command-line syntax and Windows Task Scheduler. Further, a more UI-friendly version is available with Scheduler. I haven’t tested it, but plan to soon.

Limitless Features

There are some features that make possibilities endless with EasyMorph. In no specific order:

Overall, I am not covering these in-depth, but they are powerful features and make EasyMorph a serious contender with automation / advanced techniques with data transformation.

Developer is Responsive and Adding Features Fast

One of the drawbacks of the “enterprise-ready” tools is that they have built large engineering departments with a lot of smaller teams responsible for various features. This is great for quality, documentation, and reliability. However, IMHO this comes at a cost and typically results in slower implementations of new / improved features. My assumption is that the expertise becomes much more disaggregated, requiring more time for those teams to understand (and test) the impact of their new / improved features on other features (and teams). Not to mention the hierarchy / levels of management that are implemented to manage the teams and product development. Lastly, these tools often become attached to their large corporate customers, which have all kinds of needs / restrictions that must be catered to. This is not necessarily a bad thing, but it does tend to slow down the feature iteration process.

I truly can’t speak to the inner workings / development culture at EasyMorph, but I can see that features are being improved and introduced at a very quick pace. It’s refreshing. And seeing the responsiveness from Dmitry, it seems like he is eager for feedback.

Elephant in the Room - Price

IMHO, gone are the days when large tech companies are going to win “deployments” of their tools (when demand may not be there). Take SAS for example. They were the standard a decade ago for a lot of data tasks (transformation, statistics, reporting, etc.). They really haven’t changed their model (with licensing in the thousands / tens of thousands for a single user per year, requiring companies to buy buckets of licenses) and continue to rely on their foothold in the academic community. I can’t imagine the cost of unused licenses in Corporate America today.

Regardless, the truth is that users are becoming more savvy, leveraging more open-source languages like R and Python, because of their cost (zero) and their abilities (endless). Those languages have COME A LONG WAY, but they still are too advanced for the everyday analyst. These languages are still too low-level, difficult-to-use and finicky (ever had an issue with environment variables? Yeah, me too!) and this won’t soon change because they are programming languages!

We need tools that meet the needs of the everyday (or every-month) analyst without consuming their entire department’s budget, while still providing enough abstraction of common data prep and the enterprise features that the user’s company insists upon (e.g., integration with common data sources, documentation, automation).

This is where EasyMorph shines and others still have a long way to go.

Areas for Improvement

Community

Look, it is very early for this product and I believe it will catch on. Right now, however, the community is very small. I believe that Tableau is succeeding so well because they have a robust community that users can access when they hit a speed bump. Speed bumps are the reason that users regress / quit on making their data usable. One extreme positive is that the response rate at the EasyMorph community is very quick!

I plan to be a resource there and hope that I can help keep users going on their goals of making data consumable!

Big Data

First, one must understand that “big data” has very VERY different meanings to people. I once had a client that believed that 100K rows was big data! Well, it was big to them. Typically “Big Data” is considered to be on the order of terabytes (or larger) in size and billion+ rows. Regardless, the term big data is extremely subjective.

Second, one must understand that your computer’s hardware, primarily RAM, is an important consideration in terms of how much data you can handle. Typically, the more RAM your computer has, the more data EasyMorph can process. This is typically the biggest limitation, and for most people, it can be quickly upgraded in their machines.

For EasyMorph, when it comes to data volumes, the tool can handle datasets below 1 billion data points, which is 10 million rows by 100 columns, or 100 million rows by 10 columns. Data of this size might require A LOT of RAM (maybe 256-512 GB), but it is still technically possible. Realistically, users have 4-16 GB of RAM in their machines, so 8 GB of RAM might allow a user to work with a 1 million row dataset with 30 columns. That is pretty good.
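To make the arithmetic concrete, here is a small Python sketch that counts “data points” as rows times columns and compares each example against the 1 billion ceiling mentioned above. The function and constant names are mine, and I deliberately do not estimate RAM per data point, since I don’t have a reliable figure for that.

```python
# Ceiling quoted above: roughly 1 billion data points (rows x columns).
DATA_POINT_CEILING = 1_000_000_000


def data_points(rows: int, columns: int) -> int:
    """Number of individual cells in a table of the given shape."""
    return rows * columns


# The three dataset shapes mentioned in the paragraph above.
examples = {
    "10 million rows x 100 columns": (10_000_000, 100),
    "100 million rows x 10 columns": (100_000_000, 10),
    "1 million rows x 30 columns": (1_000_000, 30),
}

for label, (rows, columns) in examples.items():
    points = data_points(rows, columns)
    share = points / DATA_POINT_CEILING
    print(f"{label}: {points:,} data points ({share:.0%} of the ceiling)")
```

The laptop-sized example (1 million rows by 30 columns) works out to 30 million data points, a small fraction of the ceiling, which matches my experience that typical analyst datasets fit comfortably.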

Overall, EasyMorph is not a “big data” tool and has limitations, but for the average user, this is typically NOT an issue. I haven’t run into any problems on my Dell laptop with 8 GB of RAM, and doubt I will. Regardless, there will be users who need to process large datasets. This limitation is supposedly coming to an end with a new columnar-based data engine in late 2018. I look forward to this!

Operating Systems

As I mentioned above, I don’t believe EasyMorph has the 100+ engineers that public companies do, so this is difficult for them to achieve, but I would love to use this application on my MacBook or a Linux machine.

Overall

I am surprised that EasyMorph isn’t more popular! It covers so many of my important bases with its robust feature set (including enterprise-level features), extensibility, and pricing. I’d give it high marks in each area and will be recommending the tool to my clients and network.

I plan to continue to port my data transformation workflows to EasyMorph and become a more active community member. In the meantime, check them out and let me know what you think in the comments below.

Cheers,

VizElement